Adding public CI/CD to a Node project with Azure Pipelines

I recently created ‘unanet-summarizer’, a small utility that gives my colleagues some additional summary information on their time sheets. It got a little more attention than I expected, but best of all it got others wanting to help out, and the codebase grew rapidly.

It was time for a build and deployment system, and I’m in love with Azure DevOps so I wanted to take this opportunity to write up the process and document it for my colleagues and others.

Goals

I wanted to achieve the following for this JS project:

- A build that runs as part of every pull request so we can detect any breaking changes

- A production release that outputs artifacts to a hosting location (in this case, a simple storage blob on Azure)

- Status badges for builds and releases

- Builds and deployments that anyone can view

The Walkthrough

What follows below is a full walkthrough, complete with some struggles, because I want it to be clear when you might miss things or run into confusing steps.

Setting up the Project

- I go to http://dev.azure.com and sign in with my Excella account.

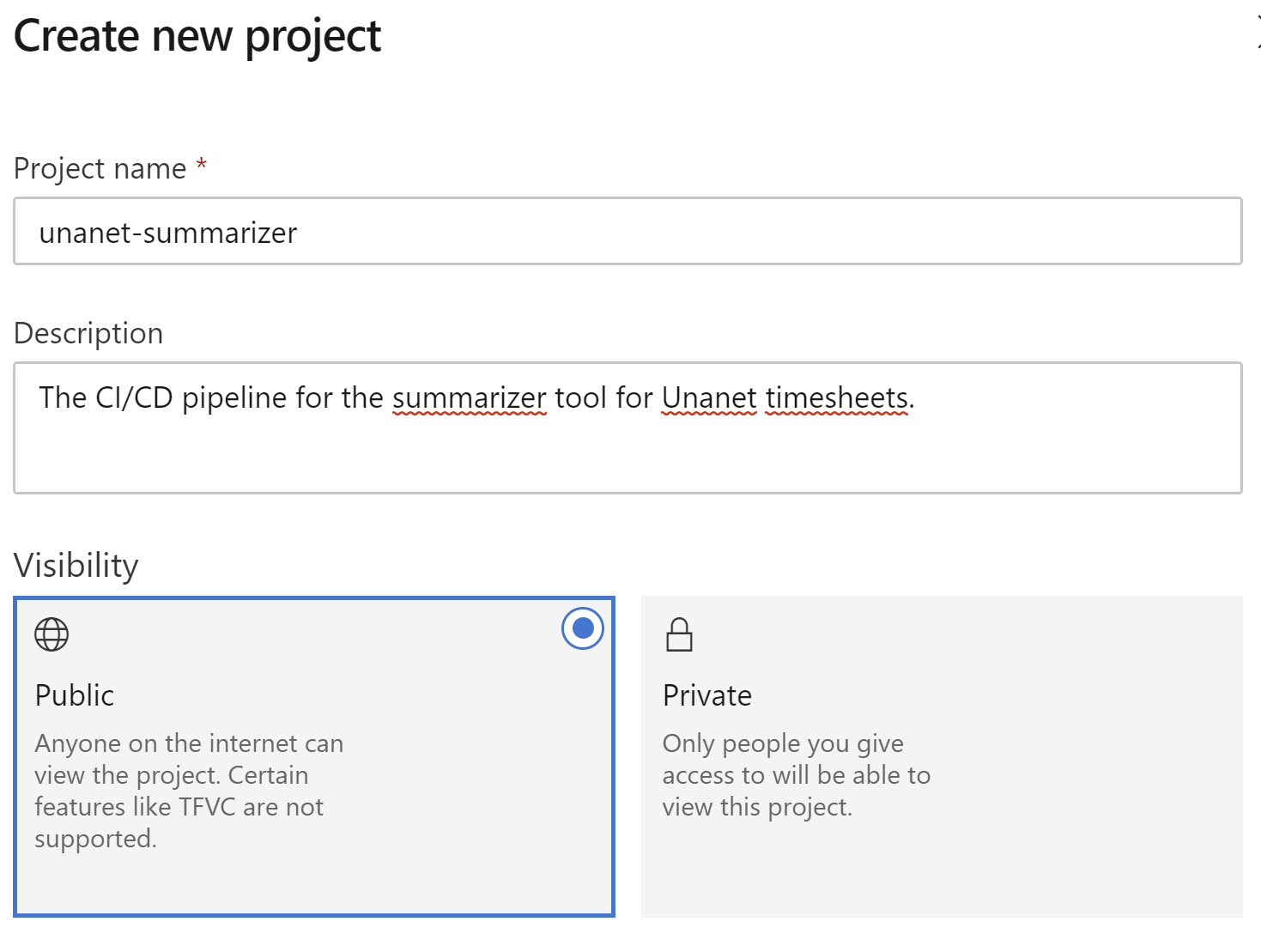

- I create a new project:

- I give it a name and select the options, keeping it public so that anyone will be able to view the builds & releases:

- In the left navigation, I click pipelines, which tells me (unsurprisingly) that no pipelines exist. I click to create one:

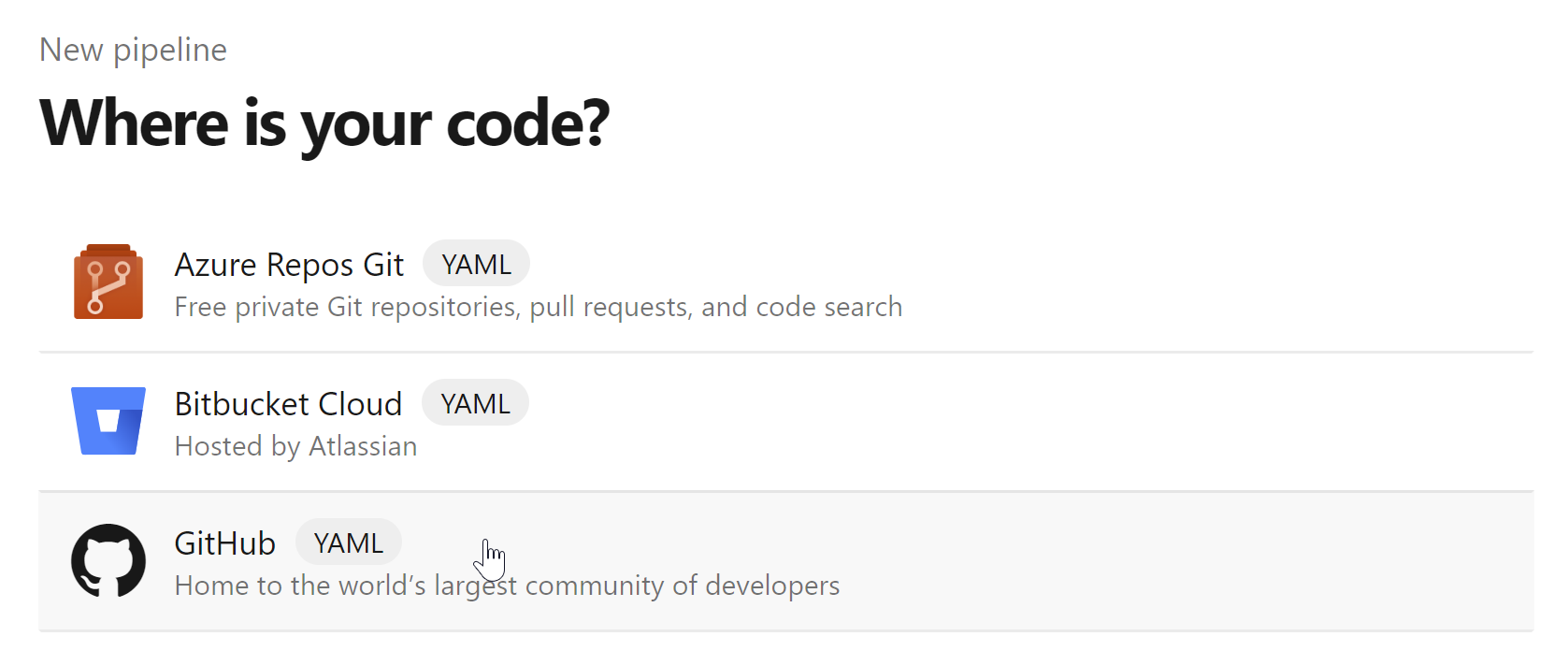

- I select GitHub for the location of the code:

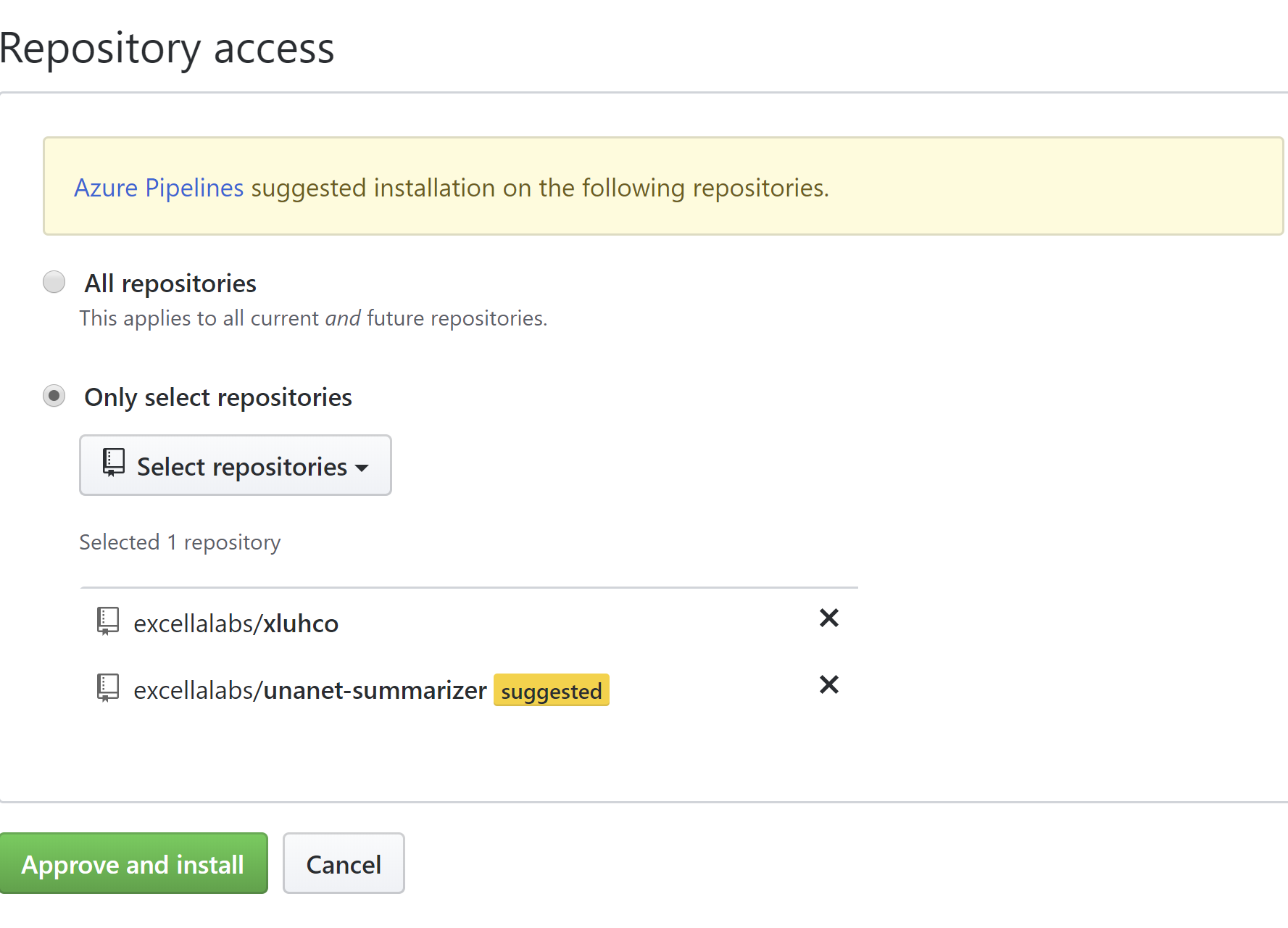

- I select all repositories from the dropdown (since it’s not my repo but rather `excellalabs`). I then search for unanet and click the summarizer project.

- I authenticate with GitHub

- In GitHub, I am then asked to give permission for the Azure Pipelines app to access the repo. I approve. 👍

- I am then asked to authenticate with my Excella account again. No idea why.

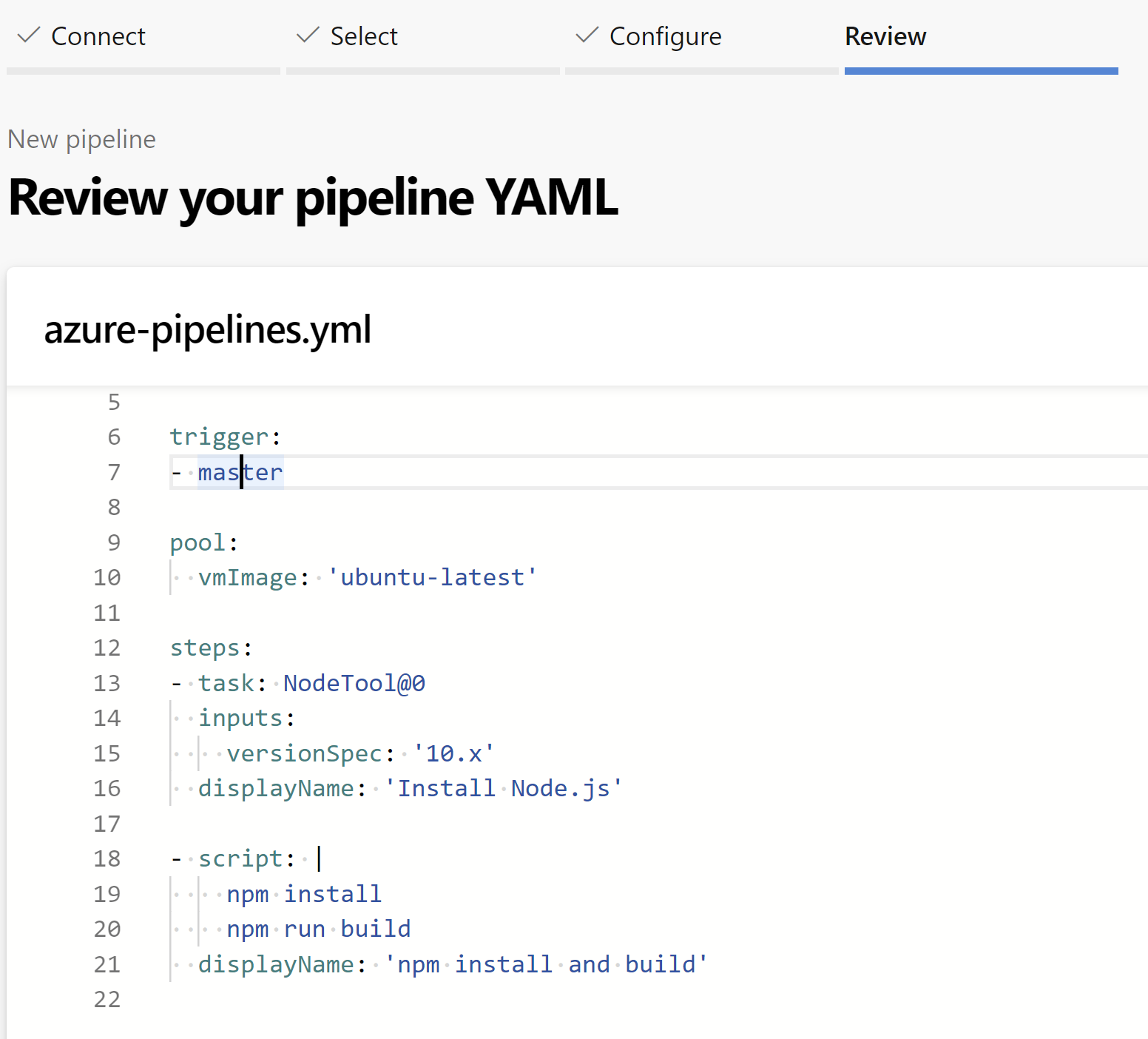

Setting up the Pipeline

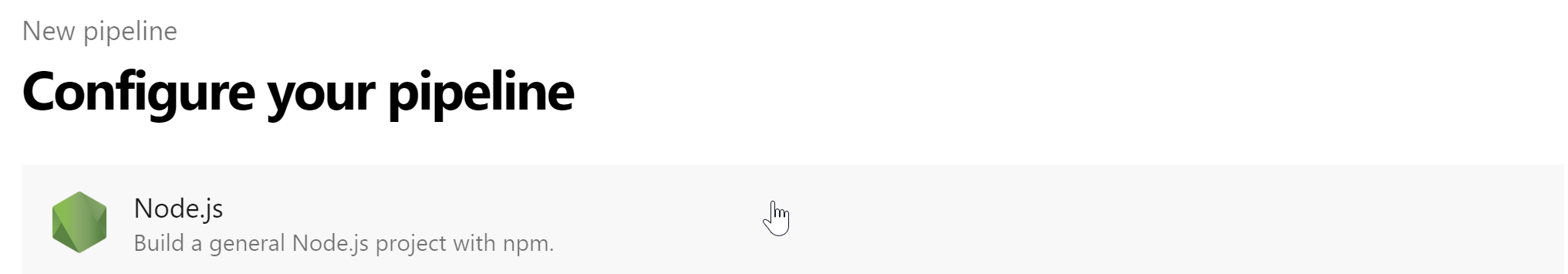

- I’m taken back to the pipelines page, where I am on the “configuration” step and can now choose what kind of pipeline I want. I choose `node.js` because I think that’ll be most suitable.

- Hey cool, Azure DevOps creates a YAML file that has a build set up for us that is triggered on any PR and anytime we push to master. It runs `npm install` and `npm run build`. That seems pretty spot on.

- Azure DevOps also has this nice Save & run button which will commit the YAML file back to our repo and begin the build process. So I click that to save it.

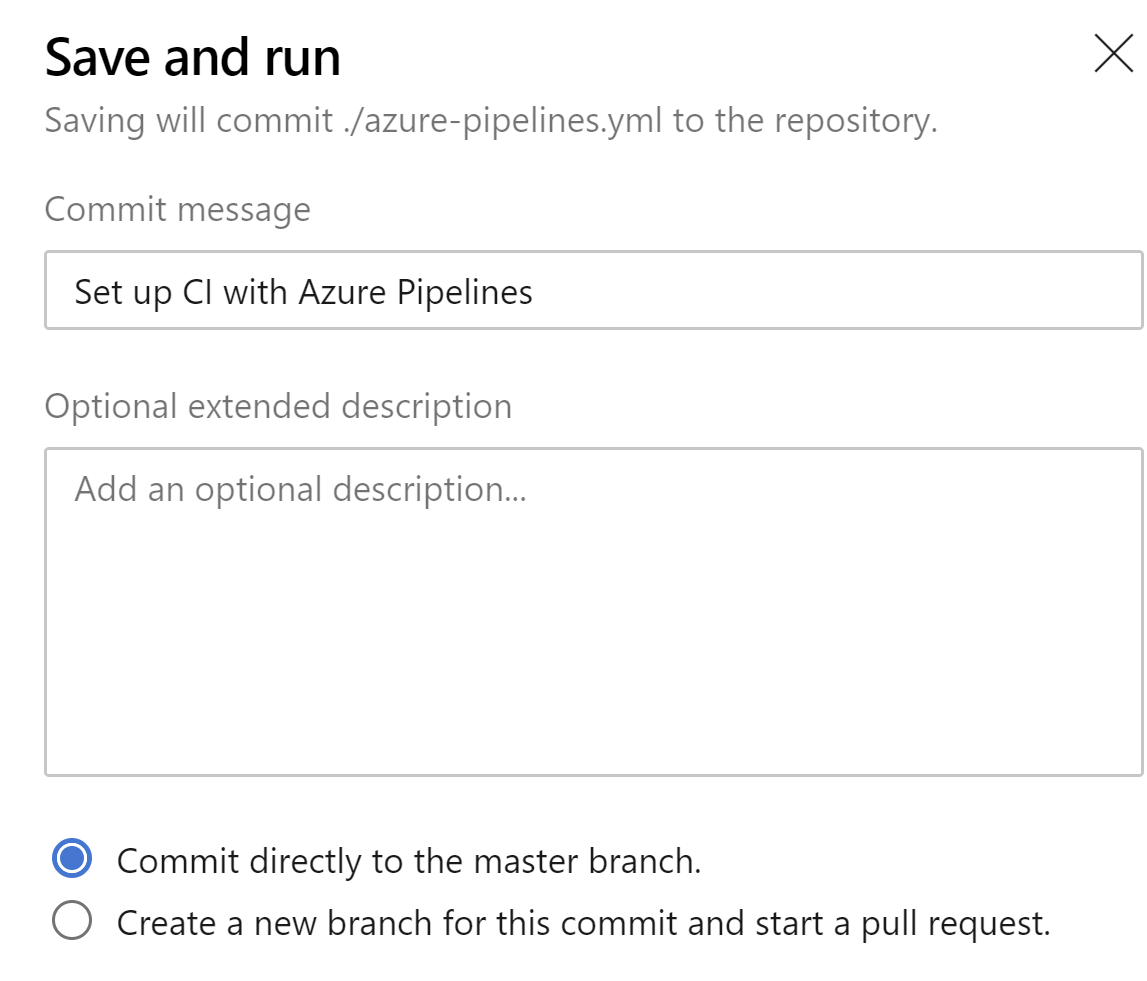

- We are given options for how to commit to the repo. I choose to commit directly to master because I live on the edge. No, kidding, but I do choose it because I see the contents and know committing to master will allow the build to kick off.

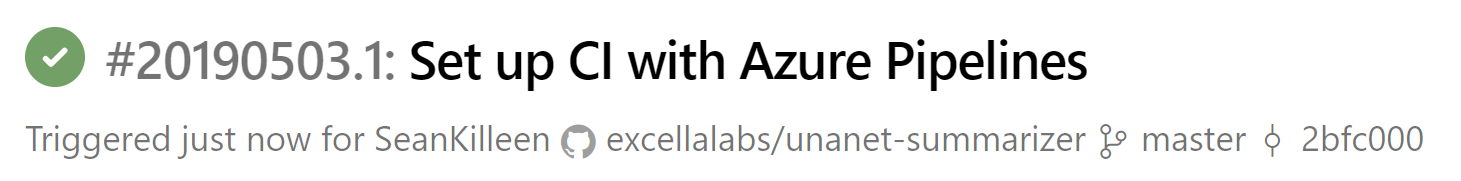

- An agent prepares itself and then runs the job. It’s a success! We’re just not doing anything with the output yet.

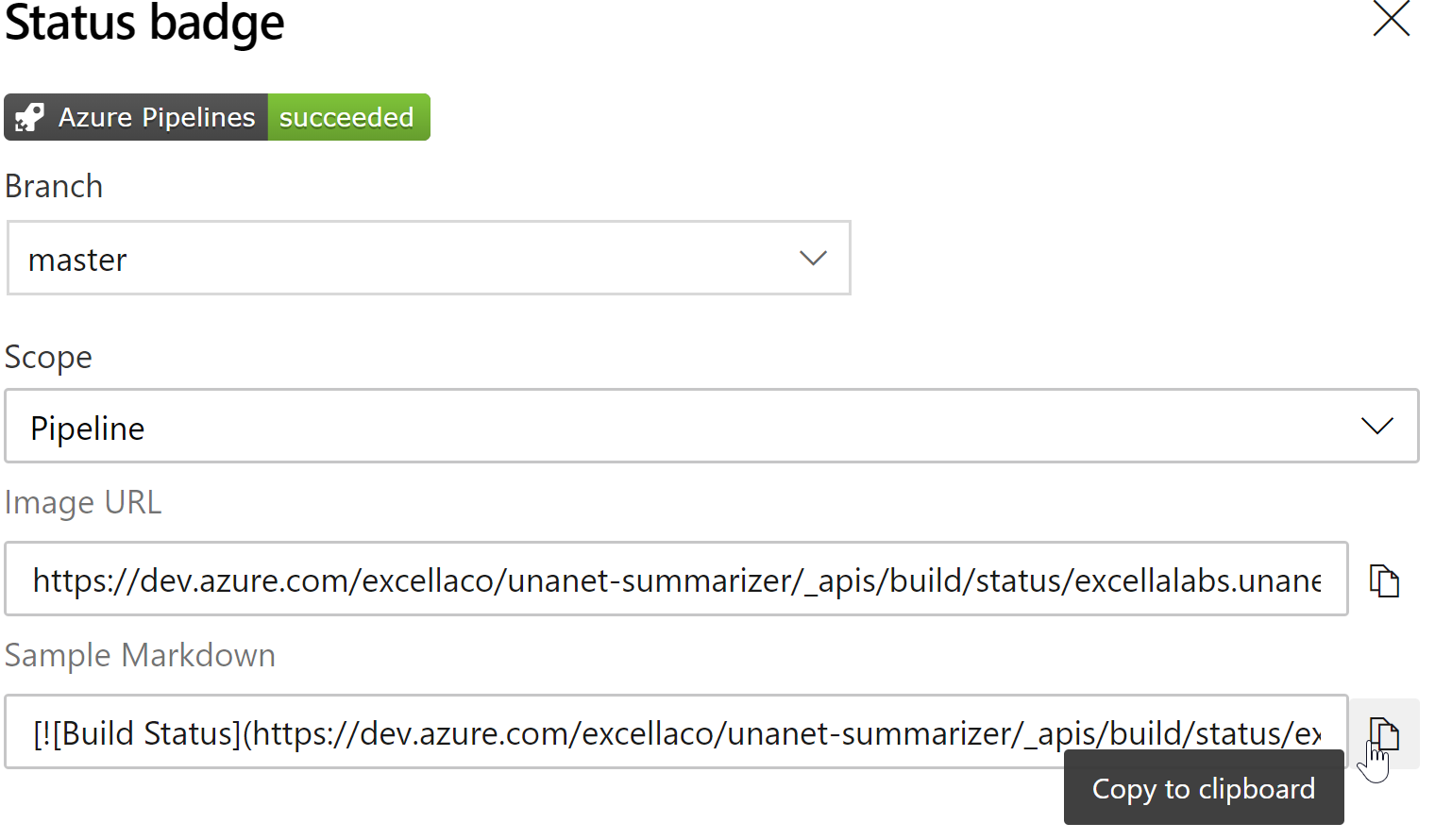
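For reference, the generated file looked roughly like the sketch below. I've reconstructed it from the Node.js starter template rather than copying it from the repo, so treat the agent image and the Node version as assumptions. The build step is shown as `npm run build`, which I believe is what the template uses.

```yaml
# azure-pipelines.yml (sketch of the Node.js starter template)
# Assumption: the vmImage and Node version shown here are illustrative,
# not necessarily what the wizard generated for this repo.

# Run a build whenever something is pushed to master.
trigger:
- master

# Use a Microsoft-hosted Linux agent.
pool:
  vmImage: 'ubuntu-latest'

steps:
# Install a specific Node.js version on the agent.
- task: NodeTool@0
  inputs:
    versionSpec: '10.x'
  displayName: 'Install Node.js'

# Install dependencies and run the project's build script.
- script: |
    npm install
    npm run build
  displayName: 'npm install and build'
```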

Status Badge

Next up, I’d like to set up a status badge for the builds that I can show in the README file.

- I go to the build definition.

- In the drop-down to the right, I select `Status Badge`:

- I choose the branch, and then copy the provided markdown (which is nice):

- I test that markdown here: (because why not?)

- Nice! I’ll create a PR and add that to the `README`.

Outputting the distribution files

- I create a PR that adds the following to the Azure Pipelines file. The YAML will (I think) take the `dist` folder of our build and output it, but only when the branch is the `master` branch. I chose the `dist` folder so we wouldn’t have to deal with `node_modules`, and I chose only the `master` branch because that’s the only build whose output we’ll actually release.

    - task: PublishPipelineArtifact@0
      displayName: Publish Pipeline Artifacts
      inputs:
        targetPath: $(Build.ArtifactStagingDirectory)/dist
      condition: eq(variables['Build.SourceBranch'], 'refs/heads/master')

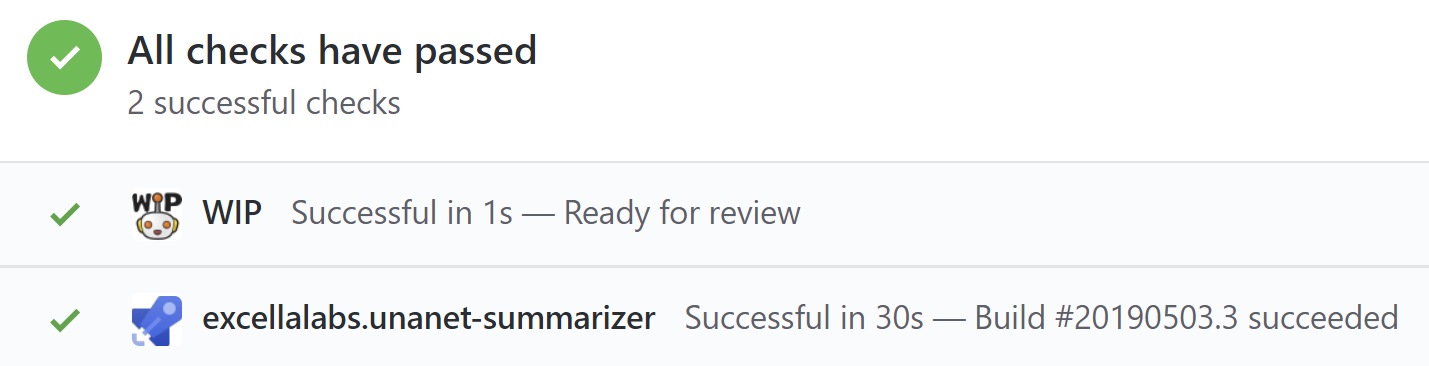

You know what? Building this PR makes me realize we never turned on Azure Pipelines PR builds within GitHub. So let’s do that.

…wait, never mind, we don’t have to. Azure Pipelines already set that up.

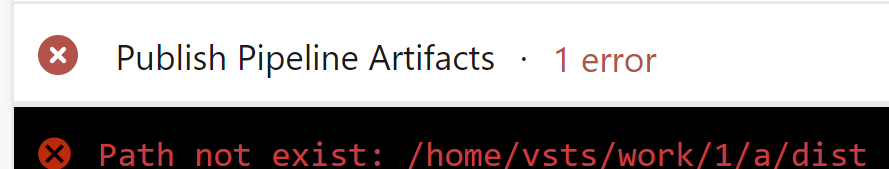
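As far as I can tell, this works because Azure Pipelines validates pull requests for GitHub repos by default when the YAML file has no `pr` section. If you would rather make that explicit, a minimal sketch (assuming `master` is the only target branch you care about) could look like this:

```yaml
# Build on every push to master.
trigger:
- master

# Also build pull requests that target master.
# Assumption: limiting PR validation to master is a choice made for this
# sketch; the pipeline in this post simply relied on the default behaviour.
pr:
- master
```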

- I watch the job go through on Azure Pipelines and it totally! …fails. Oops, I think I picked the wrong directory maybe?

Interesting. In the build output itself I see `/home/vsts/work/1/s` (the sources directory) instead of a path ending in `a` (the artifact staging directory). Maybe I’m using the wrong build variable?

…oh, whoops. In order to publish the staging contents, we’d probably have to put something there first, wouldn’t we? So I’ll add the below in a PR:

    - task: CopyFiles@2
      inputs:
        sourceFolder: $(Build.SourcesDirectory)/dist
        contents: '**\*'
        targetFolder: $(Build.ArtifactStagingDirectory)
      displayName: Copy Files to Staging Directory

OK, well that was actually a little weird. It turns out that the build directories in the variable seem to be C:\agent etc. but in the Ubuntu VM it’s /home/vsts/work/1/s. So I needed to hard-code that in order to find the files. The default didn’t work. Strange.

…and when I changed to that, it still didn’t work. Azure Pipelines isn’t finding the output files.

OK hmm, all of a sudden it works and I don’t know why. I see in the logs:

Copying /home/vsts/work/1/s/dist/unanet-summarizer-release.js to /home/vsts/work/1/a/dist/unanet-summarizer-release.js

And it copied 6000 files including node_modules etc.

So I’m going to update it now to output from `dist`. A very interesting issue.

For some reason, this ended up being the task to do it:

    - task: CopyFiles@2
      inputs:
        sourceFolder: '/home/vsts/work/1/s/dist' # For some reason, I think we need to hard-code this.
        targetFolder: '$(Build.ArtifactStagingDirectory)'
      displayName: 'Copy Files to Staging Directory'

I still don’t understand what the final change was that made it work, but this does at least make sense to me.
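In hindsight, `/home/vsts/work/1/s` looks like exactly what `$(Build.SourcesDirectory)` resolves to on the hosted Ubuntu agent, so the hard-coded path may not be needed. Here is an untested sketch of the copy and publish steps using only predefined variables. It assumes the build writes to `dist` at the repo root, that the publish step should pick up the staging directory itself, and that `drop` is an acceptable artifact name (my choice for illustration).

```yaml
steps:
# Copy only the build output (not node_modules) into the staging directory.
- task: CopyFiles@2
  displayName: 'Copy Files to Staging Directory'
  inputs:
    # Assumption: resolves to /home/vsts/work/1/s/dist on the hosted Ubuntu agent.
    sourceFolder: '$(Build.SourcesDirectory)/dist'
    contents: '**'
    targetFolder: '$(Build.ArtifactStagingDirectory)'

# Publish the staged files as a pipeline artifact, but only for master builds
# and only when the earlier steps succeeded.
- task: PublishPipelineArtifact@0
  displayName: 'Publish Pipeline Artifacts'
  inputs:
    # Assumption: 'drop' is just an illustrative artifact name.
    artifactName: 'drop'
    targetPath: '$(Build.ArtifactStagingDirectory)'
  condition: and(succeeded(), eq(variables['Build.SourceBranch'], 'refs/heads/master'))
```

Using forward-slash-free glob `**` sidesteps any question about how a backslash pattern behaves on a Linux agent.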

Onward!

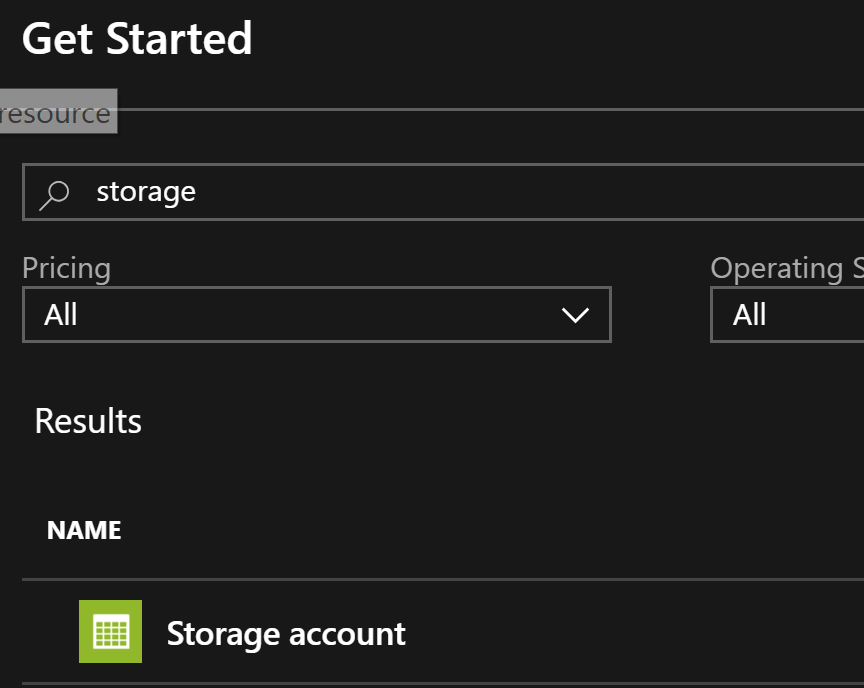

Creating the Container for Storage

NOTE: This is only one way among many to do this. You might want to push files to GitHub Pages, Netlify, etc. – this just happened to work for me.

The next step will be to create an Azure blob container and then deploy the released JS to it.

- I log in to the Azure portal using my Excella account.

- I navigate to the resource group we use for these things

- I click “Add” to add a resource.

- I type “storage” and select “Storage Account”

- I click “Create” on the intro screen.

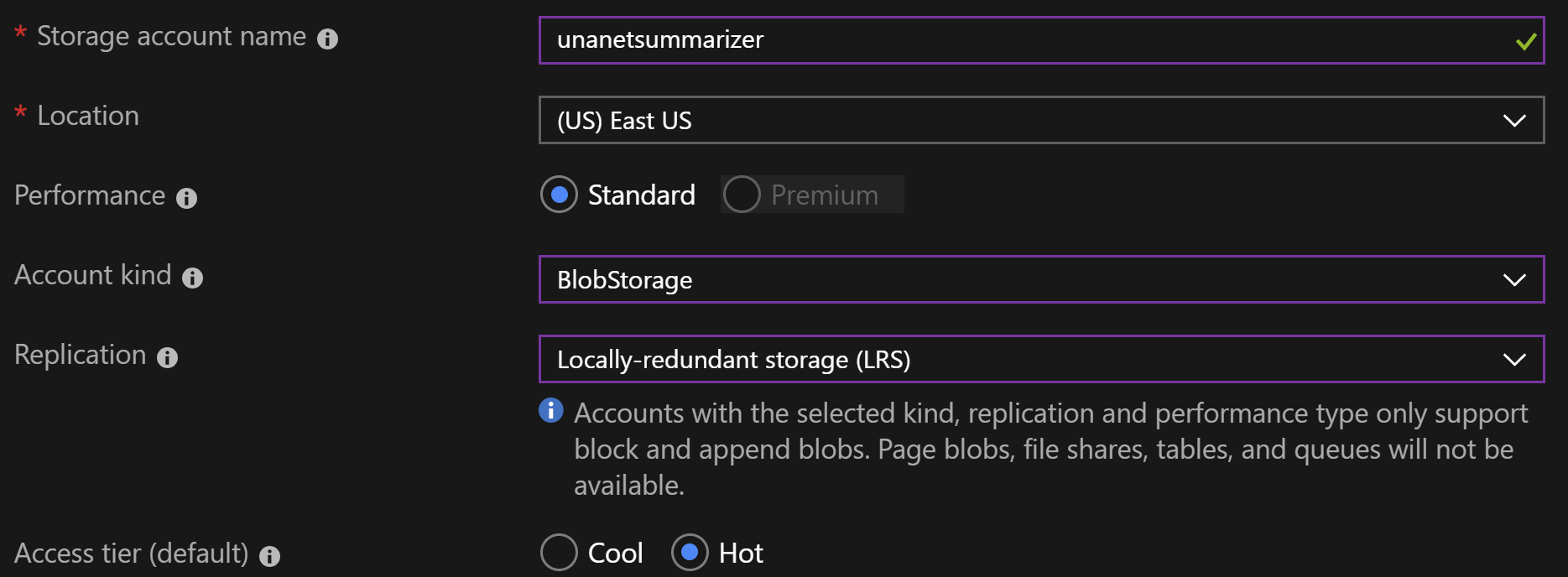

- I provide a name, region, and type for the blob storage:

- On the review screen, I click create.

- When the creation completes, I click to go to the resource.

- I don’t have any containers yet, so I click to add one:

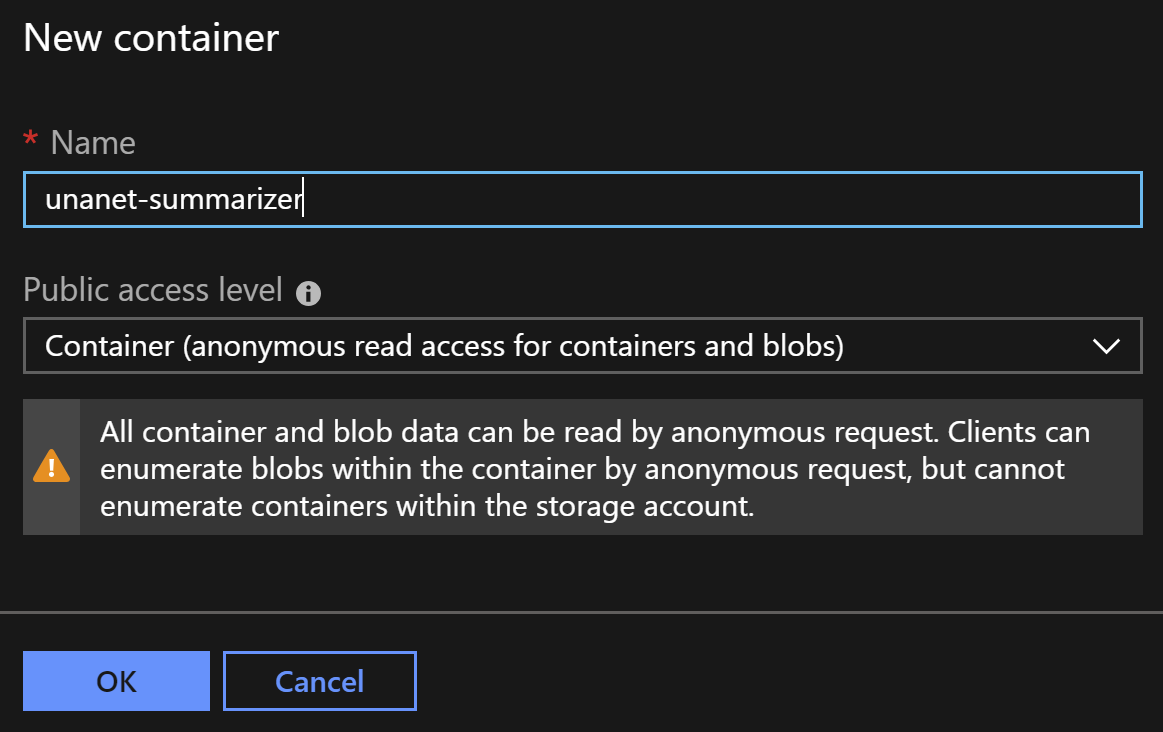

- I provide a name, and select container level anonymous read access, since our intention is explicitly to serve our scripts for the entire world to see.

- After the container is created, I click into it.

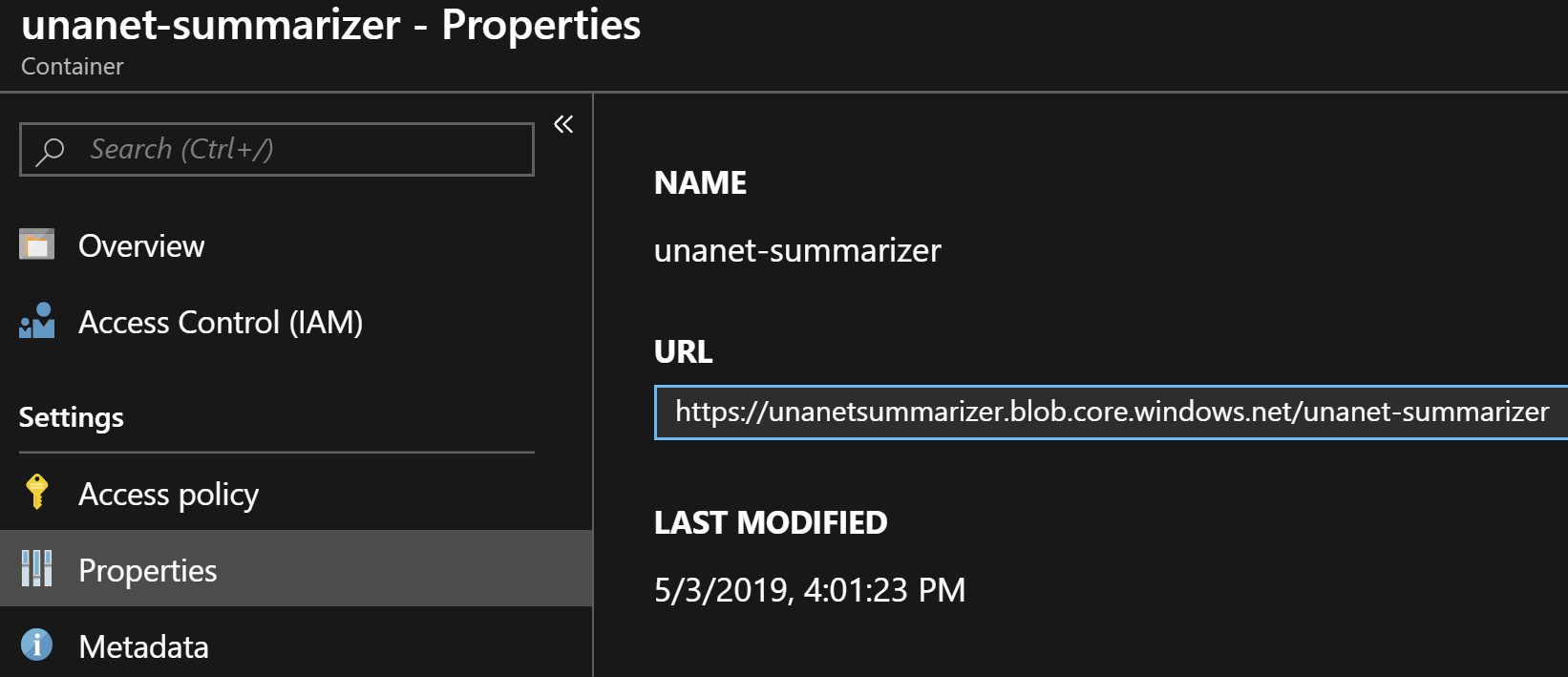

- I then click properties on the left-hand menu, and get the URL of https://unanetsummarizer.blob.core.windows.net/unanet-summarizer:

This is where we’ll eventually deploy to.
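If you prefer scripting over portal clicks, the container step can probably be expressed as an Azure CLI task instead. This is only a sketch: the service connection name is hypothetical, it assumes the storage account already exists, and it assumes the account permits anonymous blob access.

```yaml
steps:
- task: AzureCLI@2
  displayName: 'Create public blob container'
  inputs:
    # Hypothetical service connection name; use whichever one you have authorized.
    azureSubscription: 'my-azure-service-connection'
    scriptType: 'bash'
    scriptLocation: 'inlineScript'
    inlineScript: |
      # Create the container with container-level anonymous read access.
      az storage container create \
        --account-name unanetsummarizer \
        --name unanet-summarizer \
        --public-access container
```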

Creating the Deployment

Speaking of, sounds like we should go create that deployment!

- Back in Azure DevOps, I choose Releases from the left-hand menu. I don’t have any yet, which makes sense. I choose to create a new one.

- I’m prompted to start with a template but because we’re outputting to a blob, I think that an empty job probably makes the most sense.

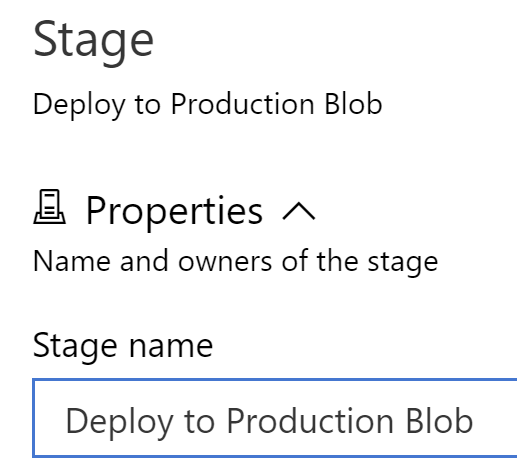

- I get a default stage (what you might do for different environments, etc.). In our case, we have just one stage so far: “Deploy to the production blob”. So I give the stage a name.

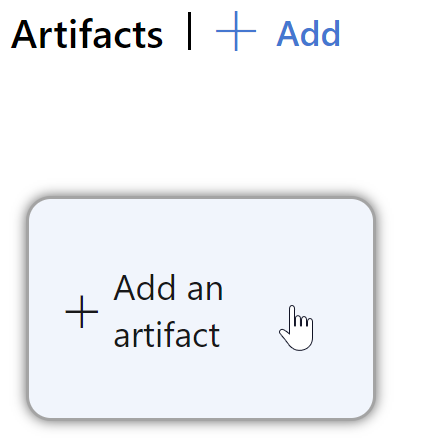

- I’m not actually pulling in any artifacts that would kick off a release yet, so I click to do that:

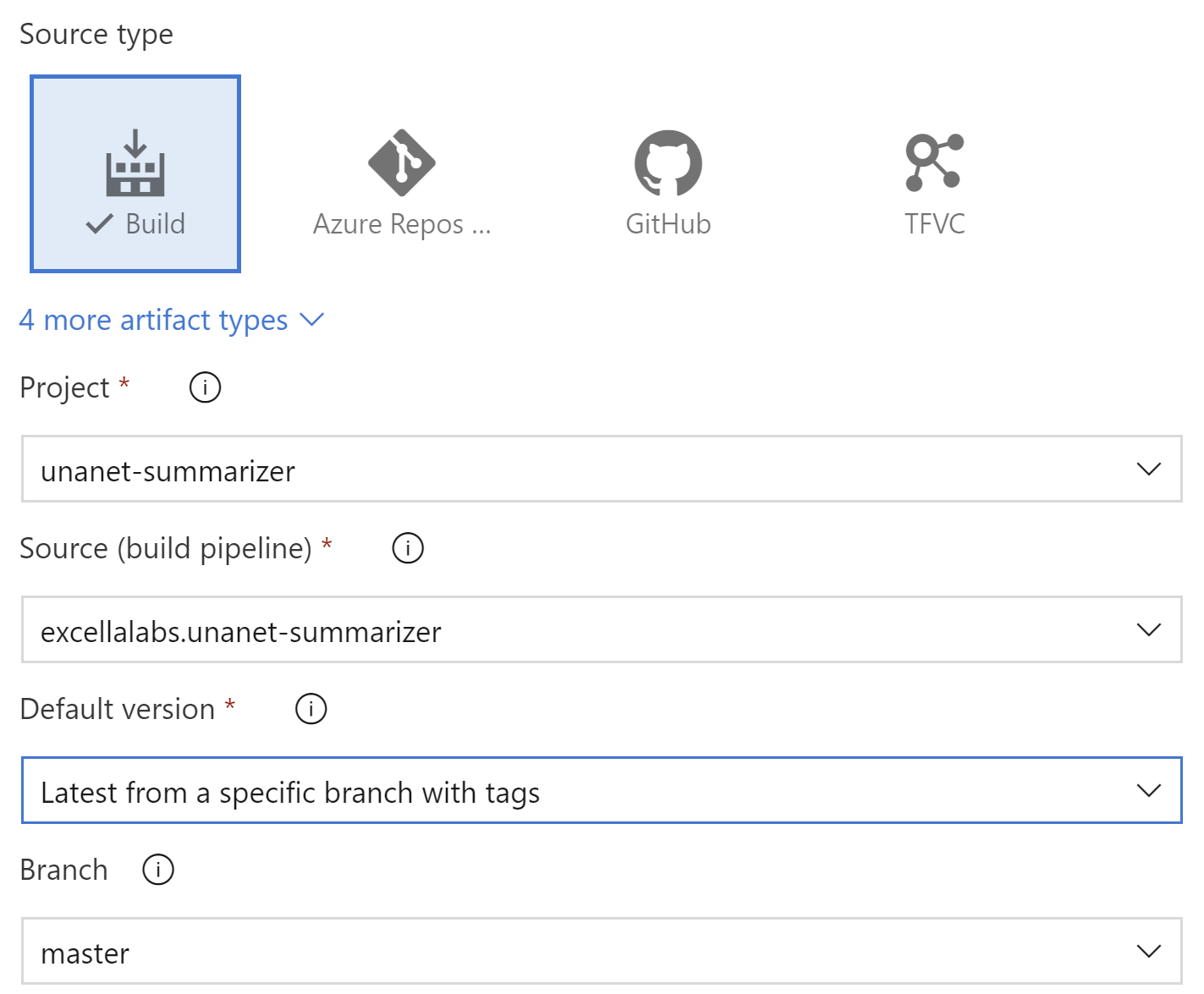

- I tell the release that I want it to use the artifacts from the latest build of the `master` branch, and I click save:

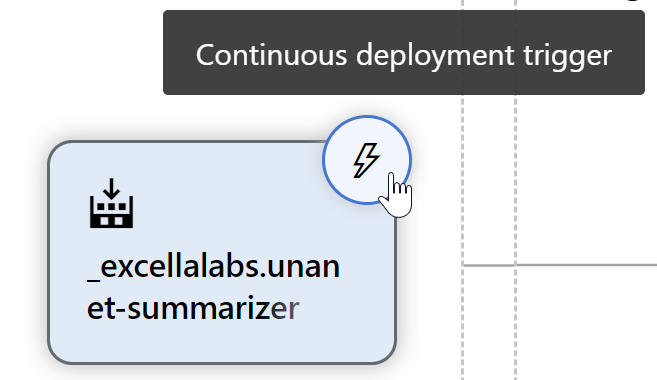

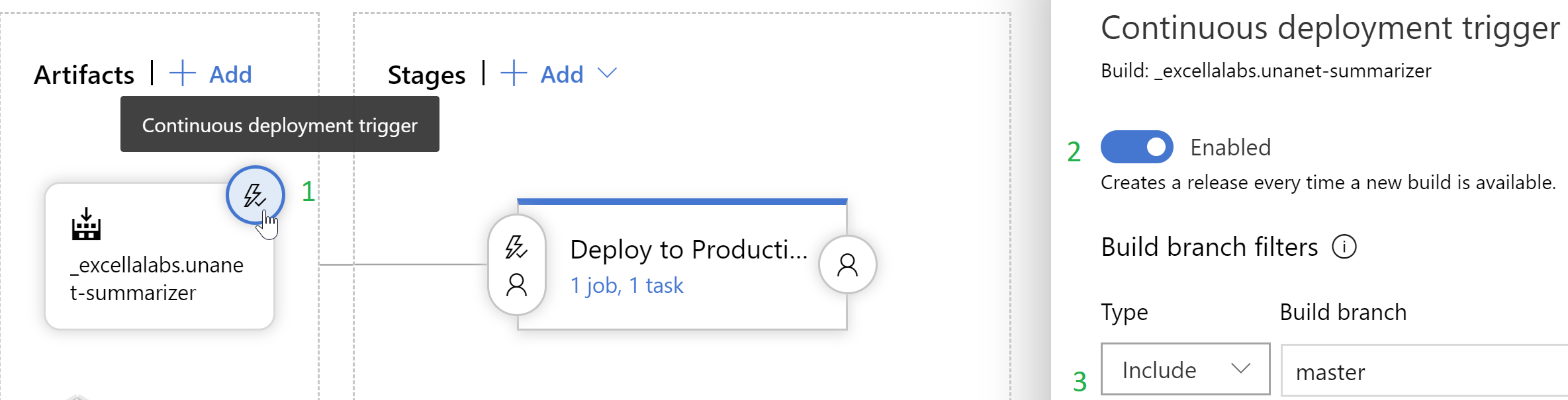

- Note the lightning bolt on the artifacts. That means that anytime a new one of these artifacts shows up, a release will be created and executed.

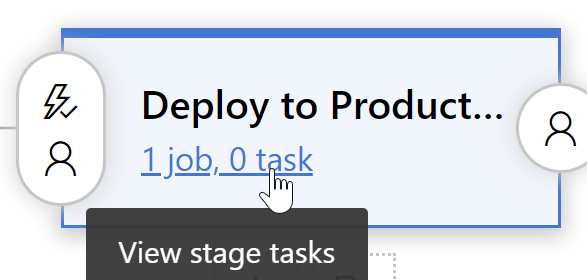

- I click to view the tasks for the stage, since we haven’t added any yet:

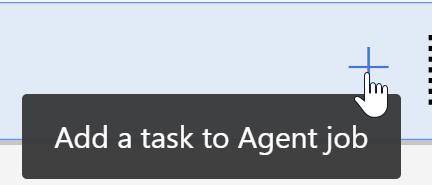

- I click to add a task to the agent job:

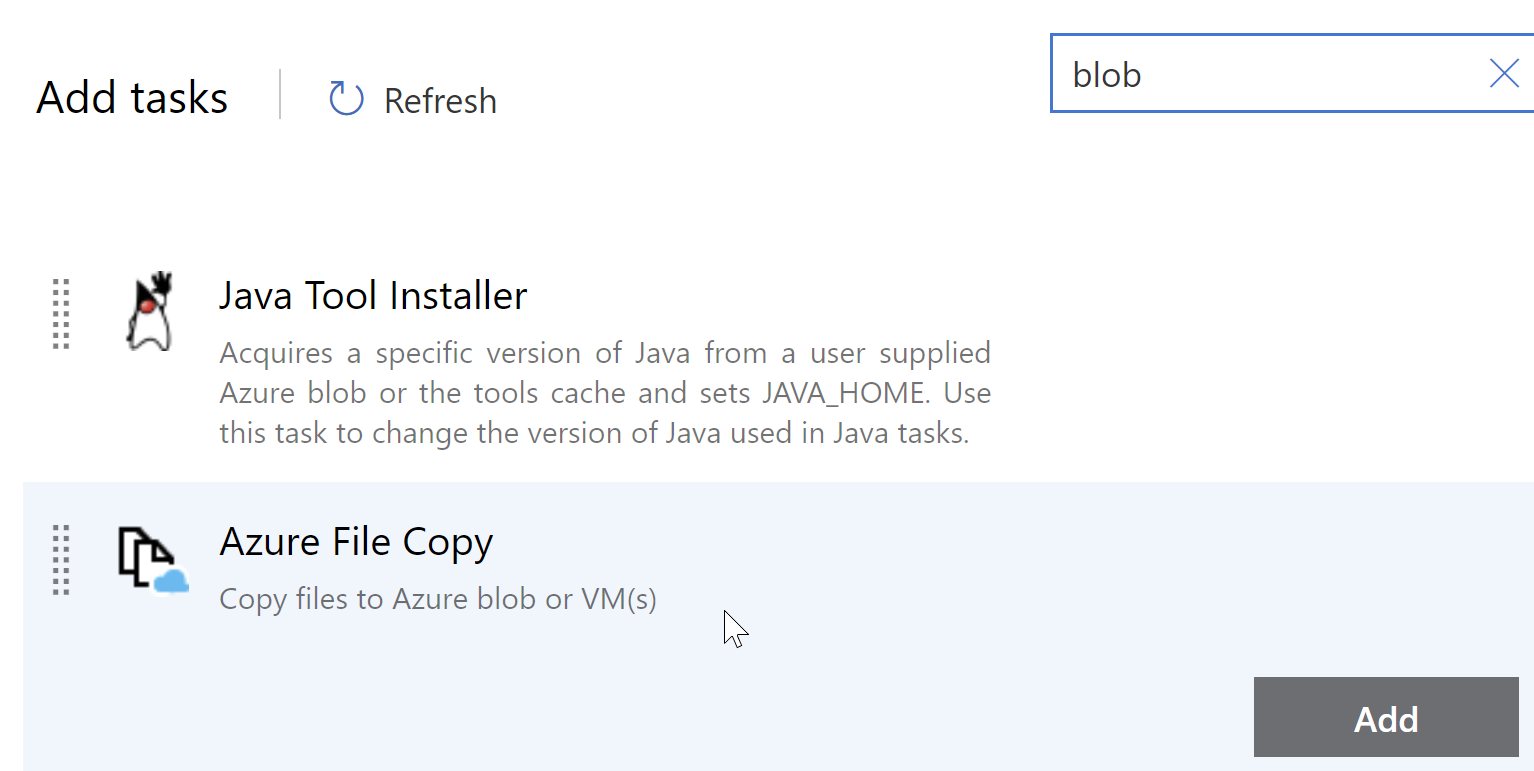

- In the tasks list, I search for “blob” (this is literally my first time doing this), and awesomely, “Azure File Copy” comes up. I click to add it.

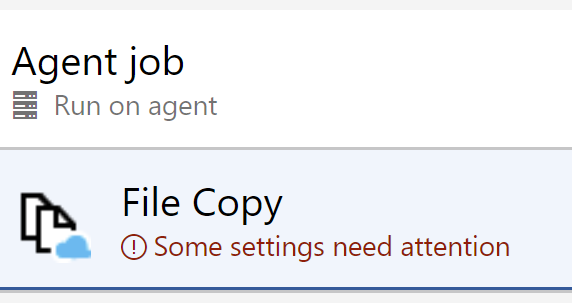

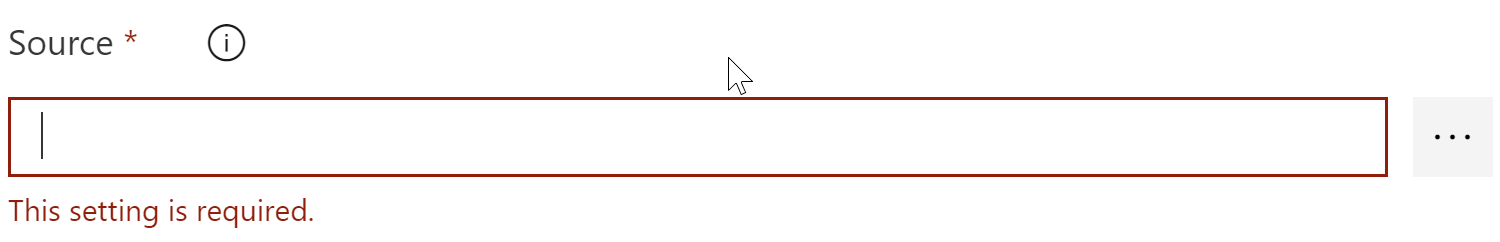

- I see that “some settings need my attention”, so I click into it:

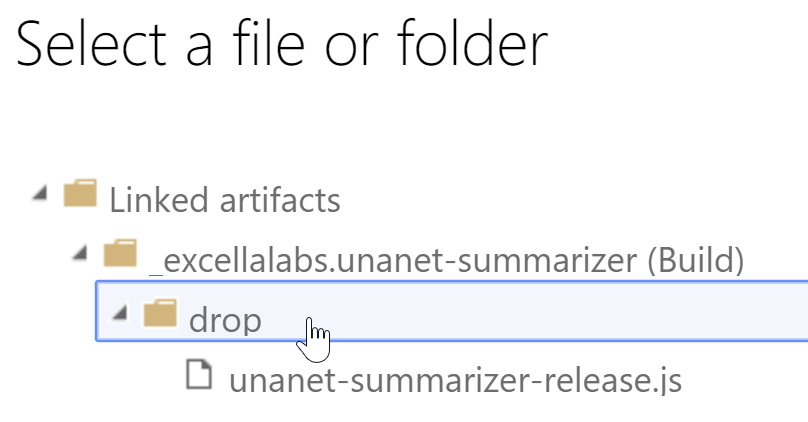

- I need to select a source. Luckily, there’s an ellipsis menu that lets me select the location based on my artifact output:

- I choose the artifact folder that I want to copy from:

- I select the subscription ID (omitting that here) and then click `Authorize` to allow Azure DevOps to get the access it needs:

…and I get an error. Which is fair, because I’m using a company resource and don’t have full admin rights there (which I’m OK with). Normally on personal subscriptions it Just Works™️.

So, I’ll leave off here for now until my IT dept is able to unblock me.

A Note on Azure Subscription Permissions

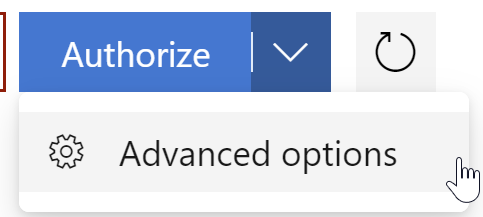

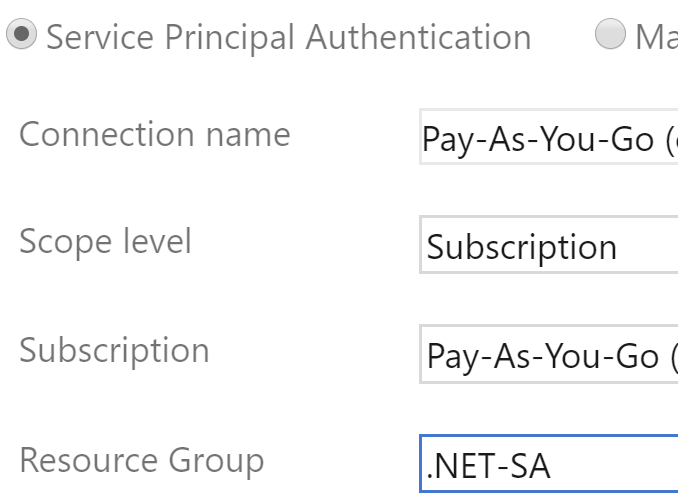

And we’re back! Fun fact: clicking that authorize button attempts to do so for a whole subscription, but if you click the advanced options:

You can select a resource group, and then it will work since I have access to the resource group:

…okay, back to our regularly scheduled show.

Selecting the Deployment Destination and Deploying

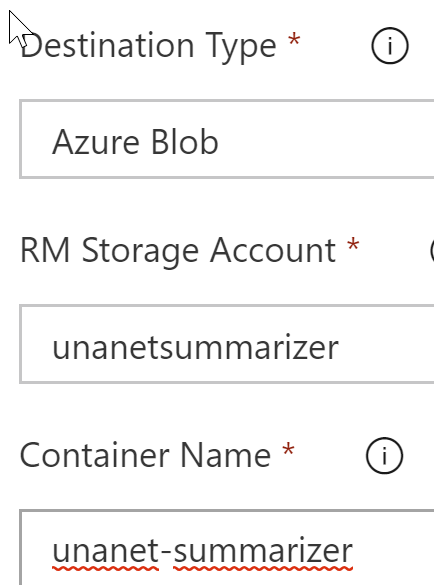

- I select the destination type and point it towards the storage account I created:

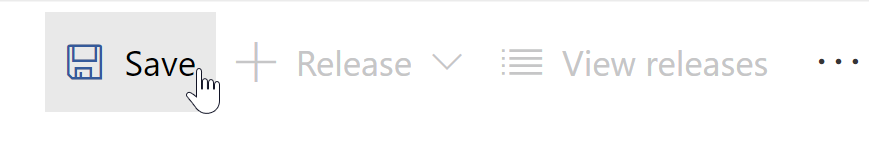

- OK, I think that’s pretty much it and I’m ready to save the release and see how this worked out.

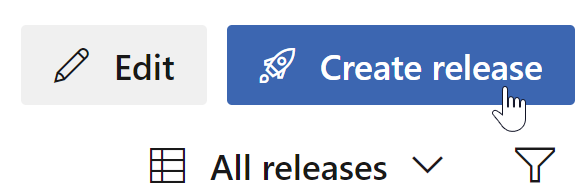

- Let’s give this a shot! I go to the releases page and click to create a release:

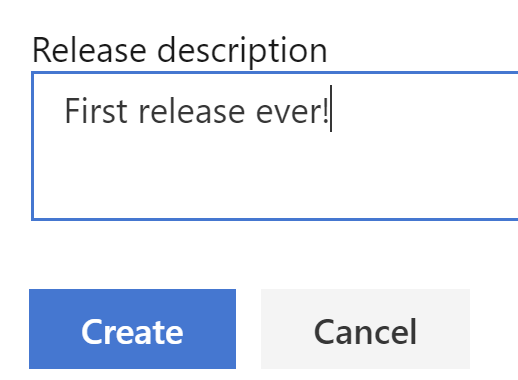

- I give the release a description, and then click `Create`:

- Looks like it worked!

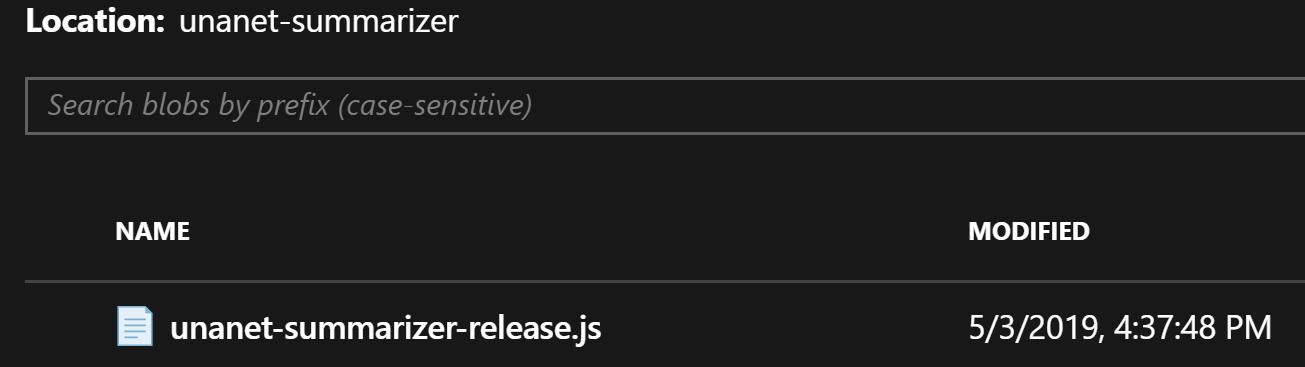

- I go back to the Azure portal to check, and lo and behold, it’s there!

- Just to check, I get the URL of the blob (https://unanetsummarizer.blob.core.windows.net/unanet-summarizer/unanet-summarizer-release.js) and I hit it in my browser. It works!
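I configured everything above through the classic release editor, but the same Azure File Copy step can also be written as YAML. The sketch below is my best guess at an equivalent, not something I ran: the service connection name and the artifact path are placeholders, and as far as I know this task needs a Windows agent.

```yaml
pool:
  # The Azure File Copy task only runs on Windows agents.
  vmImage: 'windows-latest'

steps:
- task: AzureFileCopy@3
  displayName: 'Copy release script to blob storage'
  inputs:
    # Placeholder: point this at the folder containing the downloaded artifact.
    SourcePath: '$(System.DefaultWorkingDirectory)/drop'
    # Hypothetical service connection, scoped to the resource group.
    azureSubscription: 'my-azure-service-connection'
    Destination: 'AzureBlob'
    # Storage account and container names taken from the blob URL above.
    storage: 'unanetsummarizer'
    ContainerName: 'unanet-summarizer'
```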

Adding a Release Badge

Now, releases to prod are cool, so I want to show them off publicly. How do I do that?

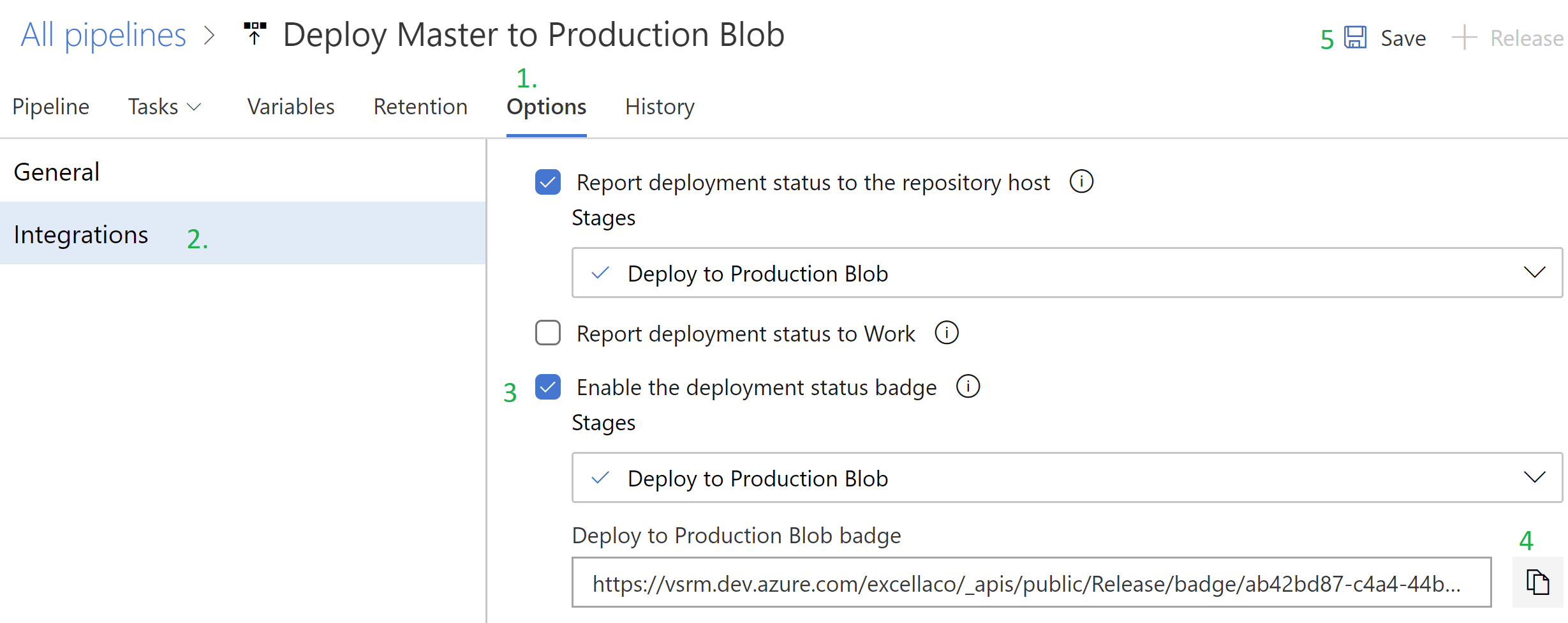

- I open the release definition in Azure DevOps. I click Options, Integrations, enable the status badge, copy the URL, and then Save the release options:

We can check it here:

Sweet! I think I’ll add it to the README as well.

Oops: Let’s actually Continuously Deploy

Oops, one last thing: I’d messed up the continuous deployment trigger option earlier. When I said a release would be created automatically for every new artifact, I forgot you have to explicitly enable that first (which makes sense, and which I appreciate).

- I edit the release definition

- I click the lightning bolt, enable continuous deployments, and add a filter for the branch:

- I save the release.

…now it deploys automatically. For real for real.
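For anyone doing this in a multi-stage YAML pipeline rather than the classic release editor, the branch filter roughly corresponds to a condition on the deployment stage. Here is a skeleton of that idea, with hypothetical stage, job, and environment names and the real deployment steps left out:

```yaml
stages:
- stage: Build
  jobs:
  - job: BuildJob
    pool:
      vmImage: 'ubuntu-latest'
    steps:
    - script: |
        npm install
        npm run build
      displayName: 'npm install and build'

- stage: DeployProduction
  dependsOn: Build
  # Only deploy when the build succeeded and came from master.
  condition: and(succeeded(), eq(variables['Build.SourceBranch'], 'refs/heads/master'))
  jobs:
  - deployment: DeployBlob
    pool:
      vmImage: 'windows-latest'
    # Hypothetical environment name.
    environment: 'production'
    strategy:
      runOnce:
        deploy:
          steps:
          # Placeholder; the actual file copy step would go here.
          - script: echo "Deployment steps go here"
            displayName: 'Placeholder deploy step'
```

PR builds still run the Build stage, but the condition keeps them from ever reaching the deployment stage.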

The Results

Now I see:

- ✅ The build finishes

- ✅ The release created itself

- ✅ The release deploys the blob appropriately

- ✅ The timestamps on the blob are updated

- ✅ The status indicators on the `README` are correct.

And with that, our work is done!
